For a long time people believed that the Sun orbits our Earth. We now know better. And so it is with universality in computation. Consider the following statement:

``It can also be shown that any computation that can be performed on a modern-day digital computer can be described by means of a Turing machine. Thus if one ever found a procedure that fitted the intuitive notions, but could not be described by means of a Turing machine, it would indeed be of an unusual nature since it could not possibly be programmed for any existing computer.''

(J.E. Hopcroft and J.D. Ullman, Formal Languages and their Relations to Automata, Addison-Wesley, Reading, Massachusetts, 1969, p. 80.)

The first sentence in the above quote is clearly false, and well known counterexamples abound in the literature. The second sentence, on the other hand, is only half true. Indeed, it is perfectly justified to say that a computation that cannot be performed on a Turing Machine must be of an unusual nature. However, this does not mean that such a computation cannot be programmed on any existing computer. As shown in what follows, the existence of such a computation would instead mean that the Turing Machine is simply not universal and that the Church-Turing Thesis (whose validity is assumed implicitly in the above quote) is in fact invalid.

I have recently shown that the concept of a Universal Computer cannot be realized. Specifically, I exhibited instances of a computable function F that cannot be computed on any machine U that is capable of only a finite and fixed number of operations per step (or time unit). This remains true even if the machine U is endowed with an infinite memory and the ability to communicate with the outside world while it is attempting to compute F. It also holds if, in addition, U is given an indefinite amount of time to compute F.

The most general statement of my result is as follows:

Non-universality in computation: Given n spatially and temporally connected physical variables, X1, X2, ..., Xn, where n is a positive integer, and a function F(X1, X2, ..., Xn) of these variables, no computer can evaluate F for any arbitrary n, unless it is capable of an infinite number of operations per time unit.

Note that F is readily computable by a machine M capable of exactly n operations per time unit. However, this machine cannot compute F when the number of variables is n+1. While a second machine M' capable of n+1 operations per time unit can now compute the function F of n+1 variables, M' is in turn defeated by a function of n+2 variables. This continues forever.

This point deserves emphasis. While the function F(X1, X2, ..., Xn+1) = F1(X1), F2(X2), ..., Fn+1(Xn+1) is easily computed by M', it cannot be computed by M. Even if given infinite amounts of time and space, machine M is incapable of simulating the actions of M'. Furthermore, machine M' is in turn thwarted by F(X1, X2, ..., Xn+2), a function computable by a third machine M''. This continues indefinitely. Therefore no computer is universal if it is capable of exactly T(i) operations during time unit i, where i is a positive integer, and T(i) is finite and fixed once and for all (for it will be faced with a computation requiring V(i) operations during time unit i, where V(i) > T(i) for all i).

Examples of such function F occur in:

1. Computations with time-varying variables: The variables, over which the function is to be computed, are themselves changing with time.

2. Computations with time-varying computational complexity: The computational complexity of the function to be computed is itself changing with time.

3. Computations with rank-varying computational complexity: Given several functions to be computed, and a schedule for computing them, the computational complexity of a function depends on its position in the schedule.

4. Computations with interacting variables: The variables of the function to be computed are parameters of a physical system that interact unpredictably when the system is disturbed.

5. Computations with global mathematical constraints: The function to be computed is over a system whose variables must collectively obey a mathematical condition at all times.

6. Computations with uncertain time constraints: There is uncertainty with regards to the input (when and for how long are the input data available), the calculation (what to do and when to do it), and the output (the deadlines are undefined at the outset); furthermore, the function that resolves each of these uncertainties itself has demanding time requirements.

7. Computations with global variables: A simple example that disproves the universality principle, in which all that is needed is a single pre-existing global variable whose value is modified by the computation itself. In addition, this paradigm offers a new dimension for separating the computable from the uncomputable, while illustrating the power of parallelism in computation.

For instance, suppose that the Xi are themselves functions that vary with time. It is therefore appropriate to write the n variables as X1(t), X2(t), ..., Xn(t), that is, as functions of the time variable t. Further assume that, while it is known that the Xi change with time, the actual functions that effect these changes are not known (for example, Xi may be a true random variable). The problem calls for computing Fi(Xi(t)), for i = 1, 2, ..., n, at time t = t0, where each Fi is a simple function of one variable that takes one time unit to compute. Specifically, let Fi(Xi(t)) simply represent the reading of Xi(t) from an external medium. The fact that Xi(t) changes with the passage of time means that, for k > 0, not only is each value Xi(t0 + k) different from Xi(t0), but also the latter cannot be obtained from the former. No computer capable of fewer than n read operations per time unit can solve this problem.

We note in passing that the above example is deliberately simple in order to convey the idea. Reading a datum, that is, acquiring it from an external medium, is the most elementary form of information processing. Any computer must be able to perform such operation. This simplest of counterexamples suffices to establish non-universality in computation. Of course, if one wishes, the computation can be made more complex, at will. While our main conclusion remains unchanged, for some, a more complex argument may sound more convincing. Thus, for example, we may add arithmetic by requiring that Fi(Xi(t)) call for reading Xi(t) and incrementing it by one, for i = 1, 2, ..., n, at time t = t0. Reading Xi(t), incrementing it by one, and returning the result takes one time unit.

In any case, a computer M capable of n operations per time unit (for example, one with n processors operating in parallel) can compute all the Fi(Xi(t)) at t = t0 successfully. A computer capable of fewer than n operations per time unit fails to compute all the Fi(Xi(t)) at t = t0. Indeed, suppose that a computer is capable of n-1 operations per time unit. It would compute n-1 of the Fi(Xi(t)) at t = t0 correctly. Without loss of generality, assume that it computes Fi(Xi(t)) at t = t0 for i = 1, 2, ..., n-1. Now one time unit would have passed, and when it attempts to compute Fn(Xn(t0)), it would be forced to incorrectly compute Fn(Xn(t0 + 1)).

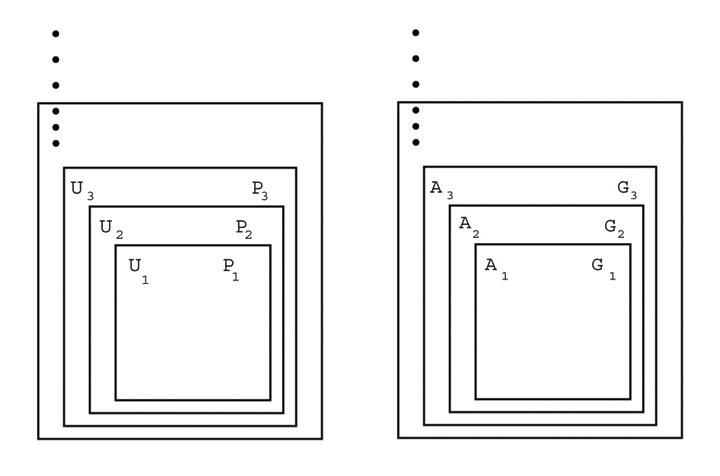

Is computer M universal? Certainly not. For when the number of variables is n+1, M fails to perform the above computation. As stated above, a succession of machines M', M'', ..., each succeeds at one level only to be foiled at the next. The implication to the theory of computation is akin to that of Gödel's incompleteness theorem to mathematics. In the same way as no finite set of axioms Ai can be complete, no computer Ui is universal that can perform a finite and fixed number of operations per time unit. This is illustrated below: For every set of axioms Ai there exists a statement Gi not provable in Ai, but provable in Ai+1; similarly, for every machine Ui there is a problem Pi not solvable on Ui, but solvable on Ui+1.

This result applies not only to idealized models of computation, such as the Turing Machine, the Random Access Machine, and the like, but also to all known general-purpose computers, including existing conventional computers (both sequential and parallel), as well as contemplated unconventional ones such as biological and quantum computers. It is true for computers that interact with the outside world to read input and return output (unlike the Turing Machine, but like every realistic general-purpose computer). It is also valid for computers that are given unlimited amounts of time and space to perform their computations (like the Turing Machine, but unlike realistic computers). Even accelerating machines that increase their speed at every step (such as doubling it, or squaring it, or any such fixed acceleration) at a rate that is set in advance, cannot be universal!

The only constraint that we have placed on the computer (or model of computation) that claims to be universal is that the number of operations of which it is capable per time unit be finite and fixed once and for all. In this regard, it is important to note that:

1. The requirement that the number of operations per time unit, or step, be finite is necessary for any "reasonable" model of computation [see, for example, M. Sipser, Introduction to the Theory of Computation, PWS Publishing Company, Boston, Massachusetts, 1997, p. 141];

2. The requirement that this number be fixed once and for all is necessary for any model that purports to be "universal" [see, for example, D. Deutsch, The Fabric of Reality, Penguin Books, London, England, 1997, p. 210].

Without these two requirements, the theory of computation in general, and the theory of algorithms, in particular, would be totally irrelevant.

The consequences to theoretical and practical computing are significant. Thus the conjectured "Church-Turing Thesis" is false. It is no longer true that, given enough time and space, any single general-purpose computer, defined a priori, can perform all computations that are possible on all other computers. Not the Turing Machine, not your laptop, not the most powerful of supercomputers. In view of the computational problems mentioned above (and detailed in the papers below), the only possible universal computer would be one capable of an infinite number of operations per time unit.

In fact, this work has led to the discovery of computations that can be performed on a quantum computer but not on any classical machine (even one with infinite resources), thus showing for the first time that the class of problems solvable by classical means is a true subset of the class of problems solvable by quantum means. Consequently, the only possible universal computer would have to be quantum (as well as being capable of an infinite number of operations per time unit).

For details, please see the references.

What follows are some misconceptions and criticisms relating to my non-universality result, and responses to them. They are presented as a dialog with an interlocutor who, I assume, has read my papers listed below on the myth of universal computation. The presentation concludes with a challenge.

Misconception 1: "So, you describe a number of functions that are uncomputable. What's new about that? Uncomputable functions have been known since the time of Turing."

Response: This is incorrect. The functions that I describe are eminently computable. In my papers listed below, every function F of n variables can be easily evaluated by a computer capable of at least n elementary operations per time unit (an n-processor parallel computer, for example). However, a computer capable of only n-1 or fewer elementary operations per time unit cannot compute F. Non-universality in computation immediately follows by simple induction.

Misconception 2: "You are proposing computations that cannot be simulated efficiently. What's new about that? Your own book Parallel Computation: Models and Methods (Prentice Hall 1997) and your earlier papers presented such computations that can be performed in constant time by n processors, but require time exponential in n when executed on n-1 or fewer processors."

Response: The error in the statement above is in the phrase "cannot be simulated efficiently". Indeed, my non-universality result does not follow from computations that cannot be simulated efficiently. It follows from computations that cannot be simulated at all. Thus, for each of the functions F of n variables that I describe in my papers listed below, no computer capable of fewer than n elementary operations per time unit can simulate the actions of a computer capable of n elementary operations per time unit. The latter computer is capable of evaluating F successfully, the former is not capable of doing so, even if given an infinite amount of time and memory space. It is this impossibility of simulation that leads to non-universality.

Misconception 3: "What do you think about this way of attacking your time-varying variables problem: First of all, we should separate the tasks into sensing and computation tasks. That means, I assume that there are sensors equipped with memory which read the values of the variables at time t and write them into appropriate memory locations. So, for every t we have memory locations x_1(t), ..., x_n(t). Furthermore, assume that the values of n and t are stored in some other variables. Then, a universal machine would read the value of n (and t), read the values from memory and perform the requested computations on these values, which is a rather simple task.

So, the main argument of this is that we allow for this separation. The sensors are peripheral components, which do not perform any computations, but "just" provide the input for the computation, i.e. they sense their values simultaneously at time t and store them in their respective memory locations, and the computations can access these values later on.

I guess that you will argue that this requires n values to be stored in the memory of the machine at the same time which would contradict a specification of a machine which is independent of n. But if we can reduce the problem you posed to this separation of concerns, we would have the consistence with the traditional theory of computation except for the addition of these sensors, which are not considered to be a part of the universal computing device but of the input specification."

Response: Surely, you cannot "separate" part of the universal computer (in this case the input unit) from the rest of the computer just to fit the problem. The universal computer is one unit, and a computational step is: [read, calculate, write].

The definition of `to compute' as `the process of transforming information' applies to all three phases of computation:

1. The input phase, where data such as keystrokes, mouse clicks, or temperatures are reduced, for example, to binary strings;

2. The calculation phase, where data are manipulated through arithmetic and logic operations, as well as operations on strings, and so on; and

3. The output phase, where data are produced as numbers on a screen, or rendered as images and sounds, for instance.

Each of the three phases represents information transformation, is an integral part of every computation, and no computation worthy of that name can exclude any of them. In particular, input and output are fundamental in the design and analysis of algorithms. Input-sensitive and output-sensitive computations often play an especially important role in deriving lower and upper bounds on algorithmic complexity.

One of the most dramatic illustrations of the interweaving of input and output with calculation is the well-known linear-time planarity testing algorithm (J. Hopcroft and R. Tarjan, Efficient planarity testing, Journal of the ACM, Vol. 21, No. 4, 1974, pp. 549--568.) In order to determine whether a graph with V vertices is planar, Step 1 of that algorithm avoids a potentially quadratic-time computation, by reading in the edges of the graph one at a time from the outside world; if there is one more edge than 3V-6, the graph is declared non-planar.

But let's assume you have set up n sensors and succeeded in solving the problem. What happens when you discover, the next morning, that there are now, not n, but n+1 inputs? Do you think it is a fair solution for you to go to the sensor shop, buy one more sensor and rush back to attach it to the extra input source and to the computer? And even if you did, what if by the time you return, the time T at which the result is needed would have passed?

Misconception 4: "Your argument is great. It is simple and effective. However, it doesn't show that there is something theoretically flawed with the concept of a universal computer; it shows that a universal computer could never be physically realized. So the Church-Turing thesis is alright if we take it that the space/time needed is infinite. Having said that, I imagine that the distinction between the theoretical and the implementation claim is often overlooked, which makes the proof integral in making that distinction very sharp indeed."[From N.]

Response: Thank you for reading. But, sorry, I beg to differ. Even if given infinite space and infinite time (which we allow the Turing Machine anyway), no computer that you define once and for all (and are not allowed to change ever again) can solve the problems that I define.

The issue is not with infinite space and infinite time. The issue is: How many operations can you do in one slice of time? You have to define this for every theoretical (and of course practical) computer. Otherwise, analysis of algorithms becomes meaningless, and the running time of a program a void concept. For example, the Turing Machine can do one, two, or three operations per slice of time, depending on your definition, but it is always a finite number. It can read a symbol, then change state, or write a symbol, or move left or right or stay put. Then a new iteration starts. It cannot do an arbitrary number of these fundamental operations per slice of time (you may call the latter a step, an iteration, a time unit, whatever). In passing, I should say that it is this finiteness of the number of operations per time unit that caused the great success of computer science, making the Computer the greatest invention of the 20th century: A machine designed once and for all that can simulate any operation by another machine. In theory, you should be able to buy a computer and use it for the rest of your life, for it will never be faced with a computable function that it cannot compute. Or so we thought ...

Suppose you have defined your machine once and for all (it can be a Turing Machine or a Supercomputer, or the chip in your digital camera or that in your toaster). I will now give you a computable function that it fails to compute. One example of such a computation is the problem of operating on variables that change with time (as described above). Even if given all the time in the world from here to eternity, even if given all the space in the Universe and beyond, you could not solve the problem, not even in theory with pen and paper. Why? Because you defined your machine and fixed it once and for all (as one should if one claims to have a Universal Computer that can simulate anything that another computer can compute). And herein lies the Achilles heel of the Universal Computer: I, as a designer of the problem, ask you for a definition of your purported Universal Computer; I then concoct a computation that thwarts it. This computation, of course, can be easily carried out by another "Universal Computer", but then I'll give you another problem, and so on.

There is nothing here about implementation. I put no limits on what your machine can do, except for one: It must do a finite number of operations per time unit. This number can be as big as you want, it can even be defined by a function of time, but it must be fixed the moment you define your model, and it cannot be infinite. Once you define it you cannot change it. We can play the game with paper and pencil. I will always win.

I believe that the result is perfectly theoretical as well as being perfectly practical.

Misconception 5: "While I could not make much sense of your "proof" (right off the bat, I do not think 'spacially and temporally connected variables' is well defined, although I also think it suffers from many other flaws) I decided to take your challenge seriously, within the bounds and the scope of your restrictions, language and assumptions, in an attempt to define a reasonable sounding computational device that would solve the time-varying variables computation. I will assume that the variables appear each on a luminous display of some sort. I am going to assume relativity (please let me know if spacially and temporally connected variables is supposed to mean something else?). There is space "k" between displays so that, when they update their values, the light from their screens arrive at my computational device at nearly, but not precisely the same time (determined by 'k' and where my device is in the 'room'). I will move my computational device close to the displays so that I can exploit the hypotenuse the signals from each display must travel first to reach the sensor of my device. Then, it is simply a matter of setting the processing speed to a finite value around 'a constant * k meters / the speed of light.' In this way, my computational device is able to compute the function F as desired. My device has one sensor. It only needs one sensor, since the signals from the displays come in sequentially. Remember that the displays are separated by a distance and the signal from each display, after they update, must travel this distance (at the speed of light). So my device uses its one sensor to read in a display and then it reuses that sensor some time later after the signal from the next display has reached it (some time later)."

Response: Right off the bat, as is clear from my papers, the spatial and temporal relationship among the variables is such that:

1) The variables occupy the same region in physical space; specifically, the distance separating any two variables is no larger than a small constant (whose magnitude depends on the general paradigm under consideration);

2) The variables are constrained by a unique parameter representing true physical time.

Now turning to your solution, I would say it is original; unfortunately, I am afraid you are missing the point of the exercise.

The problems I pose are to be treated independently of specific physical properties. For example, the time-varying variables could be anything one would want them to be (atmospheric pressures for instance, or humidity readings, etc.), and they should not necessarily be on display (they would need to be acquired, that is, measured, first). Light rays, the basis of your argument, should play no role in the solution (for they may not help in general).

However, let's consider your one-sensor solution, assuming the variables are indeed displayed. The difficulty with such setups is that they inevitably break down at some point as the problem scales up. Specifically,

1) The dimensions of the sensor are fixed.

2) As the number of variables, and hence displays, N, grows, the angle of the light rays from the furthest display approaches 0. There will not be enough real estate on the sensor for that light to impinge upon.

3) Thus, the problem poser can make N sufficiently large so as to render the sensor useless.

And one more thing: How does your sensor handle multiple simultaneous (or perhaps overlapping) inputs from displays all equidistant from it?

Criticism 1: "Your definition of computation is unconventional."

Response: Absolutely, if one considers unconventional the passage of time, or the interactions among the constituents of the universe subject to the laws of nature, or for that matter any form of information processing. In any case, my definition of computation may be unconventional, but it is not unrealistic.

Besides, it is important to realize that defining "to compute" as "what the Turing Machine does", which is quite commonly done (see, for example, L. Fortnow, The enduring legacy of the Turing machine, http://ubiquity.acm.org/article.cfm?id=1921573), leads to a logical reasoning that is viciously circuitous. If computation is what the Turing Machine does, then clearly the Turing Machine can compute anything that is computable. Furthermore, the "Church-Turing Thesis" would move immediately from the realm of "conjecture" to that of "theorem". The fact that it has not done so to this day, is witness to the uncertainty surrounding the widespread definition of "computation". As stated above, my counterexamples, by contrast, disprove the "Church-Turing Thesis".

Criticism 2: "But the Turing Machine was not meant for this kind of computation."

Response: Precisely. Furthermore, the non-universality result covers all computers, not just the Turing Machine.

Criticism 3: "Abstract models of computation do not concern themselves with input and output."

Response: This opinion is held by those who believe that computation is the process that goes from input to output, while concerning itself with neither input nor output (see, for example, Fortnow cited above). By analogy, eating is all about digestion, and a Moonlight Sonata interpretation is nothing but the hitting of piano keys. Perhaps.

Perhaps not. A model of computation is useful to the extent that it is a faithful reflection of reality, while being mathematically tractable. In computer science, input and output are not cumbersome details to be ignored; they are fundamental parts of the computation process, which must be viewed as consisting of three essential phases, namely, input, calculation, and output. A computer that does not interact with the outside world is useless. In that sense, the thermostat in your house is more powerful than the Turing Machine. Please remember that my result goes beyond the Turing Machine (which, by the way, is a very primitive model of computation). To ask computer scientists to stick to the Turing Machine and not to look beyond, would be as if physics stopped progressing beyond the knowledge of the Ancient Greeks.

Criticism 4: "Classical computability theory does not include variables that change with time."

Response: This is a serious lacuna in classical theory. My work shows that there are in fact many such lacunae. Their result is to severely restrict the definition of computation. Indeed, to define computation merely as function evaluation, with fixed input and fixed output, is unrealistic and naive. It also trivializes the Church-Turing Thesis (turning it into a tautology), because it necessarily leads to the kind of sterile circular reasoning mentioned above.

Having said that, the time-varying variables counterexample is only one of many such refutations of universality in computation. Other examples arise in the computations listed above and detailed in the publications below.

Criticism 5: "The Church-Turing Thesis applies only to classical computations."

Response: This is certainly not the case. As the multitude of examples listed in my paper amply demonstrate, the commonly accepted statement of the Church-Turing Thesis is essentially this: "There exists a Universal Computer capable of performing any computation that is possible on any other computer." There are no restrictions, exceptions, or caveats whatsoever on the definition of computation. In fact, a typical textbook definition of computation is as follows: "A computation is a process that obeys finitely describable rules." [See Rucker below] What's more, it is suggested in every textbook on the subject that, thanks to the fundamental and complementary notions of simulation and universality, every general-purpose computer is universal: A Turing Machine, a Random-Access Machine, a Personal Computer, a Supercomputer, the processing chip in a cell phone, are all universal. (My result shows this claim to be false.)

Going a little further, many authors consider all processes taking place in the Universe as computations. Interested readers may consult:

E.B. Davies. Building infinite machines. British Journal for Philosophy of Science, 52:671--682, 2001.

D. Deutsch, The Fabric of Reality, Penguin Books, London, England, 1997.

J. Durand-Lose. Abstract geometrical computation for black hole computation. Research Report No. 2004-15, Laboratoire de l'Informatique du Parallelisme, Ecole Normale Superieure de Lyon, Lyon, France, April 2004.

J. Gleick. The Information: A History, a Theory, a Flood. HarperCollins, London, 2011.

K. Kelly. God is the machine. Wired, 10(12), 2002.

S. Lloyd. Programming the Universe. Knopf, New York, 2006.

S. Lloyd and Y.J. Ng. Black hole computers. Scientific American, 291(11):53--61, November 2004.

R. Rucker. The Lifebox, the Seashell, and the Soul. Thunder's Mouth Press, New York, 2005.

C. Seife. Decoding the Universe. Viking Penguin, New York, 2006.

T. Siegfried. The Bit and the Pendulum. John Wiley & Sons, Inc., New York, 2000.

F.J. Tipler. The Physics of Immortality: Modern Cosmology, God and the Resurrection of the Dead. Macmillan, London, 1995.

T. Toffoli, Physics and Computation, International Journal of Theoretical Physics, 21:165--175, 1982.

V. Vedral. Decoding Reality. Oxford University Press, Oxford, United Kingdom, 2010.

J.A. Wheeler. Information, physics, quanta: The search for links. In Proceedings of the 3rd International Symposium on Foundations of Quantum Mechanics in Light of New Technology, pages 354--368. Tokyo, 1989.

J.A. Wheeler. At Home in the Universe. American Institute of Physics Press, Woodbury, New York, 1994.

S. Wolfram. A New Kind of Science. Wolfram Media, Champaign, Illinois, 2002.

K. Zuse. Calculating Space. MIT Technical Translation AZT-70-164-GEMIT, Massachusetts Institute of Technology (Project MAC), Cambridge, Mass. 02139, 1970.

I happen to agree with the above authors on the pervasive nature of computation. However, in order to reach my conclusion about non-universality in computation, I do not in fact need to go that far. My counterexamples use very simple computations to refute universality: computations whose variables change with time, computations whose variables affect one another, computations the complexity of whose steps changes with the passage of time, computations the complexity of whose steps depends (not on the time but) on the order of execution of each step, computations that involve variables obeying certain mathematical conditions, and so on. These are not uncommon computations. They may be considered unconventional only when contrasted with the standard view of computation as a rigid function evaluation, in which, given a variable x, it is required to compute f(x), in isolation of the rest of the world.

Criticism 6: " Actually, Alan Turing knew that computations involving physical time would cause problems for his machine."

Response: This is the back-pedaling argument par excellence. Perhaps Turing knew about the computations that I describe here, but I doubt it. The Church-Turing Thesis is proof that he and Church were convinced of the universality of the Turing Machine. In any case, I am not aware of any writings by Turing, von Neumann, or anybody else, that hint to non-universality in computation, prior to my January 2005 paper on the myth of the universal computer.

This type of argument, exemplified by Criticism 6, reminds me of the words of the American philosopher William James:

"First, you know, a new theory is attacked as absurd; then it is admitted to be true, but obvious and insignificant; finally it is seen to be so important that its adversaries claim that they themselves discovered it."

(William James, Pragmatism: A new name for some old ways of thinking, Lecture 6: Pragmatism's conception of truth, Longman Green and Co., New York, 1907, pp. 76--91.)

Criticism 7: "The problem I see with the non-universality claim is that it does not appear to be falsifiable. It might be improved by thinking further about how to make the claim more specific, clear, testable, well-defined and worked out into detail. The entire claim would ideally be described in a few very worked out, specific, sentences - needing little external exposition or justification."

Response: Have you had a chance to read any of my papers? There is no lack of mathematical formalism there. For example in one of my papers, M. Nagy and I used quantum mechanics to illustrate the first five of the seven aforementioned paradigms. I am certain you will enjoy that one (see TR 2007-537).

But let me try this since you would like something simple and crisp. How about the best understood problem in computer science, arguably sorting, but with a twist? Here is the problem:

For a positive even integer n, where n is greater than or equal to 8, let n distinct integers be stored in an array A with n locations A[0], A[1], ..., A[n - 1], one integer per location. Thus A[j], for all j = 0, 1, ..., n-1, represents the integer currently stored in the jth location of A. It is required to sort the n integers in place into increasing order, such that:

1. After step i of the sorting algorithm, for all i greater than or equal to 1, no three consecutive integers satisfy: A[j] > A[j + 1] > A[j + 2] for all j = 0, 1, ..., n-3.

2. When the sort terminates we have: A[0] < A[1] < ... < A[n - 1].

Can you solve this problem using a universal computer, one that is fixed once and for all? This is an example of computations on which a mathematical condition is to be obeyed at every step. (The answer is in my TR 2011-580.)

Criticism 8: "A fine student just presented your Non-Universality in Computation work in my graduate theory course. Going simply on her presentation, I am not impressed. Your page starts by quoting Hopcroft and Ullman and saying that it is clearly false. You took this quote out of the context of all of theoretical computer science which clearly defines that a `computation' is to start with the entire input presented and is given as much time as it wants to read this input and do its computation. It is true that since Turing, the nature of computation has changed to require real time interactions with the world. But you should not misrepresent past work. Having not studied your arguments at length, the only statement that I have gotten is that a machine is unable to keep up if you only allowed it to read in a fixed number of bits of information per time step and you throw at it in real time an arbitrarily large number of bits of information per time step. In itself, this statement is not very deep."

Response: I am sure the student did a wonderful job, but somehow the message did not get across. I will take your remarks one by one.

Remark A: "You took this quote out of the context of all of theoretical computer science which clearly defines that a `computation' is to start with the entire input presented and is given as much time as it wants to read this input and do its computation."

Response to Remark A:

1) I did not find your definition of `computation' anywhere. It is certainly not in Hopcroft and Ullman's book.

2) Your definition of `computation' applies narrowly to some primitive computational models, such as the Turing Machine, Cellular Automata, and so on.

3) Because of (2), your definition trivializes the Church-Turing Thesis, rendering it a tautology: By defining `computation' as `what the Turing Machine does', it obviously follows that `the Turing Machine can compute everything that is computable'. As mentioned earlier (see the response to Criticism 1), this is a typical example of circular reasoning.

4) Your definition is not sufficiently general to capture the richness and complexity of the notion of `computation'. Others have proposed more encompassing definitions of `computation'. Here are a few quotes:

"In a sense Nature has been continually computing the `next state' of the universe for billions of years; all we have to do - and actually, all we can do - is `hitch a ride' on this huge ongoing computation." Tommaso Toffoli, Physics and Computation, International Journal of Theoretical Physics, 21, 1982, 165-175.

"Computation is physical; it is necessarily embodied in a device whose behaviour is guided by the laws of physics and cannot be completely captured by a closed mathematical model. This fact of embodiment is becoming ever more apparent as we push the bounds of those physical laws." Susan Stepney, The neglected pillar of material computation, Physica D, 237(9):1157--1164, 2004.

"Information sits at the core of physics ... every particle, every field of force, even the space-time continuum itself derives its function, its meaning, its very existence entirely ... from answers to yes-or-no questions ... all things physical are information-theoretic in origin ... " John Archibald Wheeler, Information, physics, quantum: The search for links, in: W. Zurek (ed.) Complexity, Entropy, and the Physics of Information, Addison-Wesley, Redwood City, CA, 1990.

"Think of all our knowledge-generating processes, our whole culture and civilization, and all the thought processes in the minds of every individual, and indeed the entire evolving biosphere as well, as being a gigantic computation. The whole thing is executing a self-motivated, self-generating computer program." David Deutsch, The Fabric of Reality, Penguin Books, London, England, 1997.

5) And one more thing about your point that a computation is to "start with the entire input presented". Since all of my counterexamples indeed require the entire input to be "present", I will assume that you mean "start with the entire input residing in the memory of the computer". (Incidentally, some of my counterexamples assume the latter as well.) The relevant point here is this: Hopcroft himself has a well-known algorithm that makes no such assumption about the entire input residing in memory. As stated in response to Misconception 3 above, one of the most dramatic illustrations of the interweaving of input and output with calculation is the well-known linear-time planarity testing algorithm of Hopcroft and Tarjan. In order to determine whether a graph with n vertices is planar, Step 1 of that algorithm avoids a computation that could potentially run in time at least quadratic in n, by reading in the edges of the graph one at a time from the outside world; if there is one more edge than the absolute maximum stipulated by Euler's formula, namely 3n - 6 (the paper actually uses the loose bound 3n - 3 in order to allow for n < 3), the graph is declared non-planar.(J. Hopcroft and R. Tarjan, Efficient planarity testing, J. ACM 21(4), 1974, pp. 549-568.)

Remark B: "It is true that since Turing, the nature of computation has changed to require real time interactions with the world. But you should not misrepresent past work."

Response to Remark B:

1) I am glad to see that you agree with me about the nature of computation. You should know, however, that my counterexamples to universality are not all about "real time interaction with the world". There is a list of such counterexamples at the beginning of this article, and a list of references to my papers near the end of the article. One counterexample involving mathematical constraints (it is a variant of sorting, in which the entire input is available in memory at the outset of the computation) is described in my response to Criticism 7. Note also that my nonuniversality result applies to putative `universal computers' capable of interaction with the outside world. These `universal computers' are endowed with unlimited memories and are allowed an indefinite amount of time to solve the problems they are given. They all still fail the test of universality.

2) However, I do take exception to the claim that I misrepresented past work. Below are quotes from famous computer scientists asserting, without caveat, exception, or qualification that a universal computer is possible (often explicitly stating that such a universal computer is the Turing Machine, and essentially taking for granted the unproven Church-Turing Thesis):

"A Turing machine can do everything that a real computer can do." M. Sipser, Introduction to the Theory of Computation, PWS Publishing Company, Boston, MA, 1997, p. 125.

"Anything which can be computed can be computed by a Turing Machine." S. Abramsky et al, Handbook of Logic in Computer Science, Clarendon Press, Oxford, 1992, p. 123.

"It is theoretically possible, however, that Church's Thesis could be overthrown at some future date, if someone were to propose an alternative model of computation that was publicly acceptable as fulfilling the requirement of `finite labor at each step' and yet was provably capable of carrying out computations that cannot be carried out by any Turing machine. No one considers this likely." H.R. Lewis and C.H. Papadimitriou, Elements of the Theory of Computation, Prentice Hall, Englewood Cliffs, NJ 1981, p. 223.

"...if we have shown that a problem can (or cannot) be solved by any TM, we can deduce that the same problem can (or cannot) be solved by existing mathematical computation models nor by any conceivable computing mechanism. The lesson is: Do not try to solve mechanically what cannot be solved by TMs!" D. Mandrioli and C. Ghezzi, Theoretical Foundations of Computer Science, John Wiley, New York, NY, 1987, p. 152.

"...any algorithmic problem for which we can find an algorithm that can be programmed in some programming language, any language, running on some computer, any computer, even one that has not been built yet but can be built, and even one that will require unbounded amounts of time and memory space for ever-larger inputs, is also solvable by a Turing machine." D. Harel, Algorithmics: The Spirit of Computing, Addison-Wesley, Reading, MA, 1992, p. 233.

"It is possible to build a universal computer: a machine that can be programmed to perform any computation that any other physical object can perform. ... any computation that can be performed by any physical computing device can be performed by any universal computer, as long as the latter has sufficient time and memory." D. Hillis, The Pattern on the Stone, Basic Books, New York, NY, 1998, pp. 63 - 64.

Hundreds of such statements can be found in the literature. I provide a sample in TR 2006-511 (see below).

Finally, please note that to correct previous mistakes is not to misrepresent the past. This is how science advances. Newton, Darwin, and Einstein were giants who built great scientific edifices. Each edifice, magnificent yet incomplete.

Remark C: "Having not studied your arguments at length, the only statement that I have gotten is that a machine is unable to keep up if you only allowed it to read in a fixed number of bits of information per time step and you throw at it in real time an arbitrarily large number of bits of information per time step. In itself, this statement is not very deep."

Response to Remark C:

1) As mentioned above, the "time-varying variables" computation is but one of many counterexamples to universality.

2) As you well know, a reasonable model of computation must be finite. The Turing Machine has a finite alphabet, a finite set of states, and a finite set of elementary operations per step. To assume otherwise would render the fields of complexity theory and algorithm design and analysis useless.

3) Your characterization of my result is erroneous. I describe computable functions that no machine that claims to be universal can compute.

4) I ask you to define a "universal computer" fulfilling the requirement of `finite labor at each step' (to quote Lewis and Papadimitriou again), and I will give you a computable function that it cannot compute.

Which, finally, brings us to the challenge.

A Computational Challenge: Anyone who still does not accept my result, namely, that universality in computation is a myth, has but one option: to prove it wrong. In order to do this, one must exhibit a universal computer capable of a finite and fixed number of operations per time unit, on which any one of the computations in the following classes, and described in the papers below, can be performed:

1. Computations with time-varying variables

2. Computations with time-varying computational complexity

3. Computations with rank-varying computational complexity

4. Computations with interacting variables

5. Computations with global mathematical constraints

6. Computations with uncertain time constraints

7. Computations with global variables.

For a simplified (and perhaps more colorful) version of the challenge, please click here.

"Facts do not go away when scientists debate rival theories to explain them." Stephen Jay Gould, Evolution as fact and theory, Discover 2, May 1981, pp. 34 - 37.

Anyone planning to read the papers below, should read these quotes first; they provide the context. PDF (62.880 Kbytes)

This work has been published as follows (publications listed in reverse chronological order):

Journal papers

Akl, S.G., "Computational nonuniversality: Philosophical and artistic perspectives", LINKs (The Art of Linking, an annual transdisciplinary review), Special Edition 1, Unconventional Computing, Adamatzky, A., Ed., January 2021, pp. 8--15.

Akl, S.G., "A map of England, the simulator simulated, and nonuniversality in computation", International Journal of Unconventional Computing, Vol. 15, No. 3, 2020, pp. 219--223.

Akl, S.G., "A computational journey in the true north", International Journal of Parallel, Emergent and Distributed Systems, Special Issue on A Half Century of Computing, Adamatzky, A. and Watson, L. (eds.), Vol. 35, No. 2, 2020, pp. 132--142.

Akl, S.G., "Unconventional wisdom: Superlinear speedup and inherently parallel computations", International Journal of Unconventional Computing, Vol. 13, Nos. 4--5, 2018, pp. 283 - 307.

Akl, S.G., "Nonuniversality explained", International Journal of Parallel, Emergent and Distributed Systems, Vol. 31, Issue 3, May 2016, pp. 201 - 219.

Akl, S.G. and Salay, N., "On computable numbers, nonuniversality, and the genuine power of parallelism", International Journal of Unconventional Computing, Vol. 11, Nos. 3--4, 2015, pp. 283 - 297.

Akl, S.G., "What is computation?", International Journal of Parallel, Emergent and Distributed Systems, Vol. 29, Issue 4, August 2014, pp. 337 - 345.

Nagy, N. and Akl, S.G., "Computing with uncertainty and its implications to universality", International Journal of Parallel, Emergent and Distributed Systems, Vol. 27, Issue 2, April 2012, pp. 169 - 192.

Akl, S.G., "Time travel: A new hypercomputational paradigm", International Journal of Unconventional Computing, Vol. 6, No. 5, 2010, pp. 329 - 351.

Fraser, R. and Akl, S.G., "Accelerating machines: a review", International Journal of Parallel Emergent and Distributed Systems, Vol. 23, No. 1, February 2008, pp. 81 - 104.

Akl, S.G., "Unconventional computational problems with consequences to universality", International Journal of Unconventional Computing, Vol. 4, No. 1, 2008, pp. 89 - 98.

Akl, S.G., "Even accelerating machines are not universal", International Journal of Unconventional Computing, Vol. 3, No. 2, 2007, pp. 105 - 121.

Nagy, M. and Akl, S.G., "Quantum computing: Beyond the limits of conventional computation", International Journal of Parallel, Emergent and Distributed Systems, Special Issue on Emergent Computation, Vol. 22, No. 2, April 2007, pp. 123 - 135.

Nagy, M. and Akl, S.G., "Quantum measurements and universal computation", International Journal of Unconventional Computing, Vol. 2, No. 1, 2006, pp. 73 - 88.

Akl, S.G., "Three counterexamples to dispel the myth of the universal computer", Parallel Processing Letters, Vol. 16, No. 3, September 2006, pp. 381 - 403.

Conference papers

Akl, S.G., "Quantum exclusivity: Uniquely non-classical information processing", Theoretical and Foundational Problems in Information Studies Conference, a part of the International Society for the Study of Information (IS4SI) online Summit 2021, September 12 – 19, 2021. Nagy, N. and Akl, S.G., "Computations with uncertain time constraints: Effects on parallelism and universality", Proceedings of the Tenth International Conference on Unconventional Computation, Turku, Finland, June 2011, pp. 152--163.

Akl, S.G., "Gödel's incompleteness theorem and nonuniversality in computing", Proceedings of the Workshop on Unconventional Computational Problems, Sixth International Conference on Unconventional Computation, Kingston, Canada, August 2007, pp. 1 - 23.

Nagy, M. and Akl, S.G., "Parallelism in quantum information processing defeats the Universal Computer", Proceedings of the Workshop on Unconventional Computational Problems, Sixth International Conference on Unconventional Computation, Kingston, Canada, August 2007, pp. 25 - 52.

Nagy, M. and Akl, S.G., "On the importance of parallelism for quantum computation and the concept of a universal computer", Proceedings of the Fourth International Conference on Unconventional Computation, Sevilla, Spain, October 2005, pp. 176 - 190.

Book chapters

Akl, S.G., Exclusively quantum: Computations only possible on a quantum computer, in: Handbook of Unconventional Computing, Vol. 1, Adamatzky, A., Ed., World Scientific Publishers, London, U.K., 2021.

Akl, S.G., "Computational nonuniversality: Philosophical and artistic perspectives", in: Thoughts on Unconventional Computing, Adamatzky, A. and Lestocart, L.-J., Eds., Luniver Press, Beckington, U.K., 2021, pp. 10--17.

Akl, S.G., From parallelism to nonuniversality: An unconventional trajectory, in: From Astrophysics to Unconventional Computing, Adamatzky, A. and Kendon, V., Eds., Springer series on Emergence, Complexity and Computation, Springer International Publishing Switzerland, 2019, pp. 123--156.

Akl, S.G., Unconventional wisdom: Superlinear speedup and inherently parallel computations, in: From Parallel to Emergent Computing, Adamatzky, A. et al., Eds., Taylor & Francis, CRC Press, Boca Raton, Florida, 2019, pp. 347--366.

Akl, S.G., "Unconventional computational problems", in: Encyclopedia of Complexity and Systems Science, Myers, R.A., Ed., Springer, New York, 2018, pp. 631 - 639.

Akl, S.G., "Nonuniversality in computation: Fifteen misconceptions rectified", in: Advances in Unconventional Computing, Admatzky, A., Ed., Springer, Cham, Switzerland, 2017, pp. 453 - 465.

Akl, S.G. and Salay, N., "On computable numbers, nonuniversality, and the genuine power of parallelism", Chapter Four in: Emergent Computation: A Festschrift for Selim G. Akl, Adamatzky, A., Ed., Springer, Cham, Switzerland, 2017, pp. 57 - 69.

Akl, S.G. and Nagy, M., "Introduction to Parallel Computation", in: Parallel Computing: Numerics, Applications, and Trends, Trobec, R., Vajtersic, M., and Zinterhof, P., Eds., Springer-Verlag, London, 2009; Chapter 2, pp. 43 - 80.

Akl, S.G. and Nagy, M., "The Future of Parallel Computation", in: Parallel Computing: Numerics, Applications, and Trends, Trobec, R., Vajtersic, M., and Zinterhof, P., Eds., Springer-Verlag, London, 2009; Chapter 15, pp. 471 - 510.

Akl, S.G., "Evolving Computational Systems", in: Parallel Computing: Models, Algorithms, and Applications, Rajasekaran, S. and Reif, J.H., Eds., Taylor and Francis, CRC Press, Boca Raton, Florida, 2008; Chapter 1, pp. 1 - 22.

Akl, S.G., "Conventional or Unconventional: Is Any Computer Universal?", in: From Utopian to Genuine Unconventional Computers, A. Adamatzky and C. Teuscher, Eds., Luniver Press, Frome, United Kingdom, 2006, pp. 101 - 136.

Akl, S.G., "The Myth of Universal Computation", in: Parallel Numerics, Trobec, R., Zinterhof, P., Vajtersic, M., and Uhl, A., Eds., Part 2, Systems and Simulation, University of Salzburg, Salzburg, Austria and Jozef Stefan Institute, Ljubljana, Slovenia, 2005, pp. 211 - 236.

Invited Lecture

S.G. Akl, Ubiquity and simultaneity: The science and philosophy of space and time in unconventional computation, Keynote address, Conference on the Science and Philosophy of Unconventional Computing, The University of Cambridge, Cambridge, United Kingdom, 2009.

This project is concerned with computations whose characteristics are akin to certain unique phenomena that occur in different domains of science. We are particularly interested in systems whose parameters are altered unpredictably whenever one of these parameters is measured or modified. Examples of such computational environments include those in which Heisenberg's uncertainty principle of quantum physics is witnessed, or those in which Le Chatelier's principle of chemical systems under stress manifests itself. A study of these systems uncovers computations that are inherently parallel in the strong sense, meaning that they are efficiently executed in parallel, but impossible to carry out sequentially. For details, please see:

The prevalent paradigm of computation, to which everyone who uses computers is accustomed, is one in which all the data required by an algorithm are available when the computer starts working on the problem to be solved. A different paradigm is real-time computation. Here, not all inputs are given at the outset. Rather, the algorithm receives its data (one or several at a time) during the computation, and must incorporate the newly arrived inputs in the solution obtained so far. Often, the data-arrival rate is constant; specifically, N data are received every T time units, where both N and T are fixed in advance.

A fundamental property of real-time computation is that certain operations must be performed by specified deadlines. Consequently, one or more of the following conditions may be imposed:

Our work shows that within the paradigm of real-time computation, some classes of problems have the property that a solution to a problem in the class, when computed in parallel, is far superior in speed and quality to the best one obtained on a sequential computer. Recent results are described in the following papers:

There are two aspects to this work:

The aim of this project is to design a computational solution to the problem of multiuser detection and interference cancellation in wireless cellular basestations. This approach would allow all users in a cellular radio system wishing to communicate to be detected simultaneously at the base station. An important component of the project is the analysis and implementation of parallel algorithms for solving large systems of linear equations. (This is joint work with P.J. McLane, N. Majikian, and V.C. Hamacher of the Department of Electrical and Computer Engineering, and is funded by CITO, the Communications and Information Technology Ontario centre of excellence.)

Broadcasting with selective reduction (BSR) is a model of parallel computation. It is an extension of the Parallel Random Access Machine (PRAM), the most popular model of parallel computation. It supports all form of memory access allowed by the PRAM, that is, Exclusive Read (ER), Exclusive Write (EW), Concurrent Read (CR), and Concurrent Write (CW), with an additional BROADCAST instruction which allows all N processors to gain access to all M memory locations simultaneously for the purpose of writing. It has been shown that while an implementation of BSR requires no more resources than the weakest variant of the PRAM, namely, the EREW PRAM, BSR is more powerful than the strongest variant of the PRAM, namely, the CRCW PRAM.

The BROADCAST instruction consists of three phases: broadcasting, selection, and reduction. The broadcast phase allows each of the N processors to write a record concurrently to all of the M memory locations. Each processor's record contains two fields, a tag and a datum, where the tag helps identify those locations in which the datum is to be stored. At the receiving end, each memory location selects a subset of the receiving data by comparing the tag value of the datum with a limit value, associated with that memory location, using a selection rule. In the last phase, the data selected by each memory location are reduced to a single value using a binary associative reduction rule, and this value is finally stored in that memory location.

Many BSR algorithms have been designed to solve different traditional problems. For all the computational problems addressed so far, constant time solutions have been found using the BSR model These solutions illustrate the power and elegance of the model.

There are a number of fundamental constraints which bound bus interconnections in parallel (electronic) systems in general: limited bandwidth, capacitive loading, and cross-talk caused by mutual inductance. Another limitation concerns the classical assumption of exclusive access to the bus resources, which limits throughput to a function of the end-to-end propagation time. Optical communications have emerged as an alternative solution to these problems. Unlike the signal propagation in electronic buses, which is bidirectional, optical channels are inherently directional and have predictable delay per unit length. This allows a pipeline of signals to be created by the synchronized directional coupling of each signal at specific locations along the channel. The possibility in optics to pipeline the transmission of signals through a channel provides an alternative to exclusive bus access. Using this kind of spatial parallelism the end-to-end propagation latency can be amortized over the number of parallel messages active at the same time on the bus.

We have introduced a computational model which incorporates some of the advantages and characteristics of two existing models, namely reconfigurable meshes and meshes with optical buses. It consists of a reconfigurable array which uses optical pipelined buses for communication (AROB). This model extends the capabilities of the arrays with optical pipelined communications, by using more powerful switches and different reconfiguration rules. Some of the results obtained for the AROB model also hold for other types of arrays with optical pipelined buses, thus extending the results previously obtained for these models, and making it possible to better understand the implications of using optical interconnections for massively parallel processing.

The new model is shown to be extremely flexible, as demonstrated by its ability to efficiently simulate different variants of PRAMs, bounded degree networks and reconfigurable networks. A number of applications of the AROB are presented, and its power is investigated. Our initial investigations revealed that this architecture is suitable for massively parallel applications in different areas as low level image processing (Hough transform, distance transforms), sparse matrix operations (multiplications, transpose), etc.

As future work we intend to study the algorithmic implications of using this model to solve different problems which arise in communications, digital signal processing and on-board satellite data processing.

With the advances being made in optical engineering, we are seeing many architects adapting traditional interconnection models to take advantage of the new technology. The mesh and lattice networks are well-studied architectures, for which there exist many useful algorithms, but depending on the communication patterns dictated by the computation, the performance can be limited by their large diameter.

The optical hypermesh is a mesh-based interconnection which uses fiber-optic multichannel switches to provide a communication path between nodes aligned along a particular dimension. Compared to a hypercube of similar size, the hypermesh matches the O( 1 ) cost for permutations over nearest neighbours, while maintaining a significantly smaller diameter and a reduced physical interconnection complexity.

Many optimal algorithms have been found on the Hypercube network. A hypercube of degree n has n edges incident at each vertex. The nth dimension link joins a vertex u to v where v differs from u in the nth bit of the binary representations. An attractive alternative to the Hypercube with smaller degree and diameter was proposed by Akers, Harel and Krishnamurthy in 1986 called the Star. An n-Star (Sn) has n! vertices, labelled by the n! permutations of the set {1,2,..,n}. It has n-1 links incident to each vertex, and each neighbour of vertex u along dimension d, 2<=d<=n, swaps the first and the dth symbols in the label of u. The Pancake model (Pn) is similar to the Star, except the vertices adjacent to u along dimension d are labelled by "flipping" the first d symbols of u. Currently, the best known algorithm for sorting on the n-Star has time complexity O(n^3 log n). Sequentially, sorting can be done in O(n!logn!) time, so to have an optimal cost, a star with n! processing elements should take O( logn! ) = O( nlogn ) time. Further reseach into achieving cost-optimal algorithms will include investigation of embedding known networks such as the hypercube and mesh into the Star and Pancake, which are inter-embeddable with a dilation cost of O( n ), i.e. the maxium shortest distance between f(x) and f(y) where f is the emedding from vertices of Sn (or Pn) to vertices of Pn (or Sn) and (x,y) is an edge of Sn (or Pn). The exact diameter of the Pancake is not known and will be investigated.

Several efficient parallel algorithms were recently developed for problems defined on proper circular arc graphs. These include:

The role of computers in society is expanding at a rapid pace and is not restricted to the bounds set out by the theory of parallel computation as it is currently formulated. In particular, dynamic computers which interact with their environment do not conform to the limitations of the speedup and slowdown (or Brent's) theorems. The phenomenon of a disproportionate decrease in execution time of p over q processors, for p > q, is referred to as superunitary speedup. An analogous phenomenon, called superunitary 'success ratio' occurs in dealing with tasks that can either succeed or fail, when there is a disproportaionate increase in the success of p over q processors executing a task. A range of conditions were identified which lead to superunitary speedup of superunitary 'success ratio'. Furthermore, several new paradigms for problems which admit such superunitary behavious were proposed. These results suggest that a new theory of parallel computation may be required to accomodate the new paradigms.

Broadcasting with Selective Reduction (BSR) is a model of parallel computation in which N processors share M memory locations. During the execution of an algorithm several processors may read from, or write to, the same memory location simultaneously, such that each processor gains access to at most one location. An additional type of memory access is also permitted in BSR by means of which all processors may gain access to all memory locations at the same time for the purpose of writing. At each memory location, a subset of the incoming broadcast data is selected (according to an appropriate selection criterion) and reduced to one value (using an appropriate reduction operator): this value is finally stored in the memory location. It has been shown that BSR, while more powerful that the strongest variant of the Parallel Random Access Machine (PRAM), namely the Combining Concurrent Read Concurrent Write PRAM, demands no more resources to be implemented than the PRAM's weakest variant, namely the Exclusive Read Exclusive Write (PRAM). Two contributions were made recently:

A number of interconnection networks for parallel computers are studied. These networks include, for example, the star, the generalized hypercube, and the multidimensional torus. The following results are obtained for these networks:

In addition, a general framework was derived for communication on a subclass of Cayley graph based networks.

The star network has received considerable attention recently as an attractive topology for interconneting processors in a parallel computer. Investigation of this network has led to the following results:

Optimal parallel algorithms, designed to run on the EREW PRAM model of computation, were obtained for solving the following problems:

Return to Parallel Computation Group Home Page