Introduction

During tele-communication and tele-work, it is important to know not only who is working with whom, but also who is working on what. One of the most prominent usability problems of current tele-communication and tele-work environments is the lack of support for representing these joint attention states. This has prompted researchers, mainly in the fields of Human-Computer Interaction (HCI) and Computer Supported Cooperative Work (CSCW), to focus on aspects of awareness in communication and cooperative work. However, proposed solutions often require very explicit management of communication flow, or cause a considerable increase in network load and consequently do not scale well.

More specifically, visual attention is a very important element of a participant's general state of attention. When measuring the locus of visual attention, gaze direction is the only reliable indicator. The design of systems which convey gaze direction has been complicated by the lack of an appropriate input device. This has resulted either in intricate system setups involving multiple cameras and large flat-panel displays, or in problematic mappings of human sensory and effector channels, because substitute devices such as the mouse are used to convey visual attention. However, a new generation of eye-tracking devices has emerged which allows for acceptable measurement of gaze direction without restraining head movement.

An Overview of the Project

In this project, GAZE, a prototype multi-party conferencing and collaboration system, is designed and implemented. In this system, communication flow will be directed according to visual attention, as conveyed by a wide range of possible input device modules, from mouse to eyetracker. The design will focus on the development of interaction styles and the representation of attention in different interaction modalities such as video communication, audio communication, textual communication, and the editing of different types of shared documents. Attention information will also be used to regulate the quality of service of the various media.

Implementation

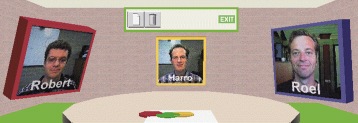

We have developed a first prototype of the GAZE Groupware System. The conferencing component uses a 3D meeting room metaphor, in which each participant is represented by an image mapped onto a 3D surface which rotates according to the gaze direction of that participant. The quality of this image will eventually range from still images with audio to H.320-compliant full-motion video. Lightspots on a shared table, and in documents, indicate where others are working (see figure). The implementation is based on the VRML 2.0 specification, with behaviour provided by Java. The Sony Community Place Bureau is the first server to implement shared behaviour in multi-user worlds, and its extensions to VRML 2.0 and its Java libraries are used as a basis for the first prototype. The Sony Community Place Browser is used as a client.
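
The rotation of a participant's image surface toward the object of their gaze can be illustrated with a small Java sketch. This is not the prototype's code: the class and method names are hypothetical, the meeting room is assumed to be laid out on a floor plane with X and Z coordinates, and a surface with a yaw of 0 is assumed to face along +Z. The result is expressed as an axis-angle rotation (x, y, z, angle), the form VRML 2.0 uses for rotation fields.

```java
/** Illustrative sketch only: turn an image surface toward a gaze target. */
final class GazeRotation {
    private GazeRotation() {}

    /**
     * Yaw in radians about the vertical (Y) axis that turns a surface at
     * (fromX, fromZ) to face the point (toX, toZ) on the floor plane.
     * Assumption: a surface with yaw 0 faces along +Z.
     */
    static double yawToward(double fromX, double fromZ, double toX, double toZ) {
        // A right-handed rotation about +Y by angle a maps the +Z direction
        // to (sin a, 0, cos a), so the angle is atan2(dx, dz).
        return Math.atan2(toX - fromX, toZ - fromZ);
    }

    /** Axis-angle rotation (x, y, z, angle) about the Y axis. */
    static float[] toAxisAngle(double yaw) {
        return new float[] {0f, 1f, 0f, (float) yaw};
    }
}
```

For example, a surface at the origin looking at a target one unit along +X would be given the rotation `toAxisAngle(yawToward(0, 0, 1, 0))`, a quarter turn about the vertical axis.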

Eyetracking Technology

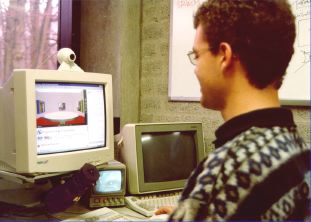

The GAZE Groupware System knows where participants are looking by means of the LC Technologies Eyegaze System pictured here. The Eyegaze System delivers real-time point-of-gaze coordinates to a host computer running the groupware system. The use of the eyetracking system is transparent to the user, although provisions are being made to allow for privacy control.

Left: Gaze Groupware System with Eyegaze System.

Right: Eyegaze camera with infra-red LED.
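
How point-of-gaze coordinates might be turned into an attention target can be sketched as a simple hit test. This is a minimal illustration under stated assumptions, not the Eyegaze API or the prototype's code: the class names, the region names, and the use of pixel rectangles for on-screen participants and documents are all hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative sketch only: map a point of gaze to a named screen region. */
final class GazeTargets {
    /** Axis-aligned screen rectangle in pixels (hypothetical layout unit). */
    static final class Region {
        final int x, y, width, height;

        Region(int x, int y, int width, int height) {
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }

        /** True if the point lies inside; right and bottom edges are exclusive. */
        boolean contains(int px, int py) {
            return px >= x && px < x + width && py >= y && py < y + height;
        }
    }

    // Insertion order is kept so that earlier regions win when regions overlap.
    private final Map<String, Region> regions = new LinkedHashMap<>();

    void add(String name, Region region) {
        regions.put(name, region);
    }

    /** Returns the name of the first region containing the point, or null. */
    String targetAt(int px, int py) {
        for (Map.Entry<String, Region> entry : regions.entrySet()) {
            if (entry.getValue().contains(px, py)) {
                return entry.getKey();
            }
        }
        return null;
    }
}
```

A host application could register one region per participant image or shared document and call `targetAt` for each gaze sample it receives; a `null` result means the participant is not looking at any registered region.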

Presentation

The GAZE Groupware System was first shown to the public at ACM'97 Expo: "The Next 50 Years of Computing", March 1-5, 1997, San Jose, California, in celebration of the first computing society's 50th anniversary.

Contact

Dr. Roel Vertegaal

CISC Dept.

Queen's University

Canada
